Can you confirm if this happened to you again?

When I updated, I tested it and it deleted the files. I can test again later, but the site is a production one, so I need to make a manual backup first.

Is it possible that, after I updated, some kind of cache made it keep using the outdated code?

Can you tell me whether the error happens when you create backups from the GUI or through scheduled Google Drive backups?

Sorry to bump…

@ricardojds, if you can, perhaps you could make a screen recording of this error.

@die2mrw007 I just got this error yesterday. I installed the latest CyberPanel on a Contabo VPS last month and set up Google Drive backup the day before. On the first day the backup worked properly; yesterday the backup failed and my public_html got deleted. What's the solution here? This is the worst thing that can happen to any site.

Kindly send me SSH and CyberPanel access of your server via PM.

For now I restored the files from the first backup. I am using 2.1 Build 2 now. So is this related to the update or to something else? If I run the update script now, will it make any changes?

Sorry to bump.

Sometimes the CyberPanel developers push an update without changing the version number.

And yes, it can fix or break something &_&

That is no secret about CyberPanel: with the same version, a different installation or update day can result in different bugs or different improvements, because the developers don't expose a sub-build version or last-update date.

I'm a bit busy these days; I hope to get back to this in the next two weeks. For now, I'm backing up manually with mysqldump and tar.

Next week I will deploy on Ubuntu 20 and check whether the backups work there.

One thing I noticed just now: the powertools-for-cyberpanel repo is giving an error. Maybe some tools are missing and that is why the backup doesn't run properly.
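For anyone doing the same in the meantime, a manual backup of this kind can be sketched in Python as below. This is a minimal sketch, not CyberPanel's backup logic: it assumes the site lives under a folder such as `/home/<domain>/public_html`, and that `mysqldump` can authenticate on its own (for example via `~/.my.cnf`). The function names `archive_site` and `dump_database` are hypothetical.

```python
import subprocess
import tarfile
from pathlib import Path


def archive_site(site_dir: str, dest: str) -> str:
    """Write a gzip-compressed tar of site_dir to dest and return dest."""
    src = Path(site_dir)
    with tarfile.open(dest, "w:gz") as tar:
        # arcname stores paths as "public_html/..." instead of the full absolute path
        tar.add(src, arcname=src.name)
    return dest


def dump_database(db_name: str, out_file: str) -> None:
    """Run the mysqldump CLI for one database.

    Assumption: credentials are picked up from ~/.my.cnf, so none are passed here.
    --single-transaction gives a consistent dump of InnoDB tables without locking.
    """
    subprocess.run(
        ["mysqldump", "--single-transaction", f"--result-file={out_file}", db_name],
        check=True,  # raise CalledProcessError if mysqldump fails
    )
```

A possible call, with a hypothetical domain and destination: `archive_site("/home/example.com/public_html", "/root/manual/example.com.tar.gz")`. Writing the archive outside `public_html` keeps it out of the next backup run.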

I have this problem too with a Google Drive backup.

I have version 2.1.2.

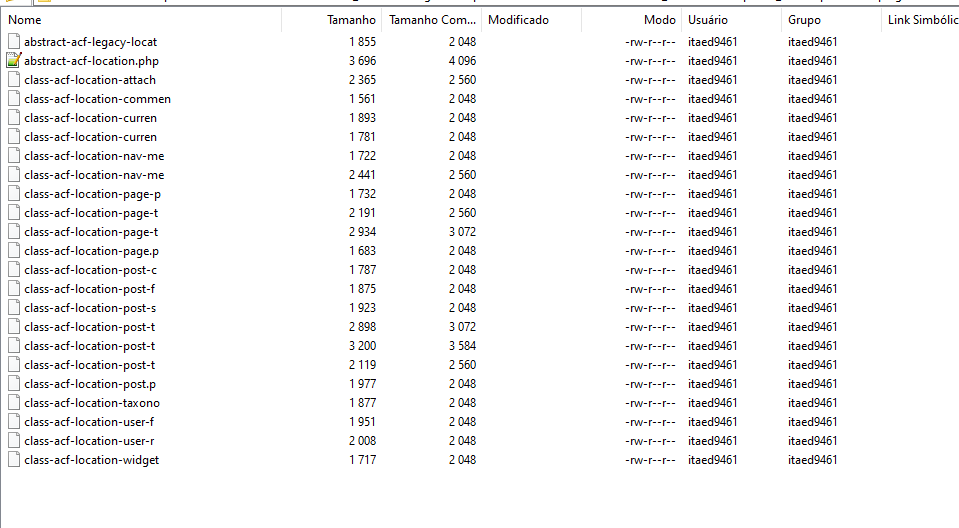

But the worst problem is the backup that I'm trying to restore: a lot of file names have been cut off.

Look

Jesus Christ, I'm in big trouble right now.

Impossible to restore those backups. I lost a client website.

I really recommend that, if you have a WordPress website, you verify the plugin and theme files.

Update: I lost the client too.

Update 2: I tested the normal backup and the same thing happens: file names are cut off.

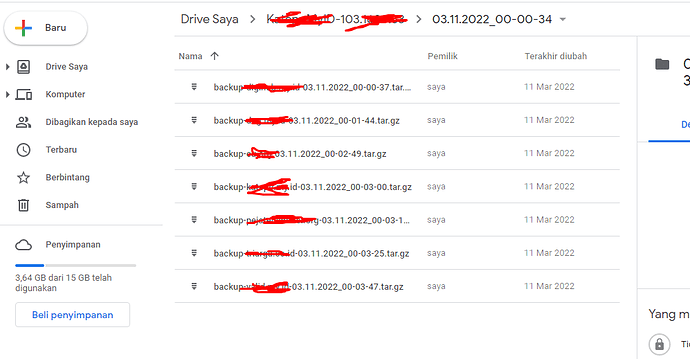

My gdrive backup files are tar.gz.

To test, I downloaded one file (random selection), uploaded it to /home/backup, and restored it.

Everything was fine.

Good to know. I didn't have the same luck. I checked other websites and the problem is the same. For now I made a manual zip of all websites.
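Because the damage here was truncated file names that only showed up at restore time, it may help to compare an archive against the live folder before trusting it. The sketch below is a hypothetical helper, `missing_from_archive`, that lists files present on disk but absent from the archive under the same relative path. It assumes the archive stores entries as `public_html/...` at its top level (as a manual tar like the one made with `tar.add(..., arcname="public_html")` would); the internal layout of CyberPanel's own backup archives is not covered here and may require extracting an inner archive first.

```python
import os
import tarfile
from pathlib import Path


def missing_from_archive(archive: str, site_dir: str) -> list[str]:
    """Return relative paths of files under site_dir that the archive lacks.

    Assumption: archive members are named "<site folder name>/<relative path>",
    e.g. "public_html/wp-config.php". A truncated name in the archive shows up
    here as the original full-length path being reported missing.
    """
    src = Path(site_dir)
    with tarfile.open(archive, "r:*") as tar:
        # Only regular files; strip a leading "./" that some tar tools add
        members = {m.name.removeprefix("./") for m in tar.getmembers() if m.isfile()}
    missing = []
    for root, _dirs, files in os.walk(src):
        for name in files:
            rel = Path(root, name).relative_to(src.parent).as_posix()
            if rel not in members:
                missing.append(rel)
    return sorted(missing)
```

An empty list means every file on disk has a same-named entry in the archive; it does not verify file contents.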

Hi everyone, today I started the second deployment I had to do, this time on Ubuntu Server to test my theory. Unfortunately I was wrong: it happened again on Ubuntu.

Test scenario: I created a text file in public_html containing a string (14 bytes) and put the original site backup file (4.7 GB) in the public_html folder. The package has "Enforce Disk Limits" unchecked.

What i found:

1 - If I keep the 4.7 GB file owned by root:root, the folder is deleted when I run the backup.

2 - If I change the file to be owned by the website user and group (AAAAA####), everything goes as planned.

3 - For the 14-byte text file, it doesn't matter whether it is owned by root or by the website user.

Can anyone who knows the code better make sense of this? Why is the public_html folder deleted when there is a big file owned by root?

Good info. Someone more knowledgeable will have to comment on why this happens, but you really should have files within a website owned by the website user. I can't see any reason to have them owned by root, and clearly this is one of probably many examples of what can break if that's the case.

It's why it is advised to use site-specific FTP users rather than logging in via root: when you upload/change files, it does it as the website's user rather than root.

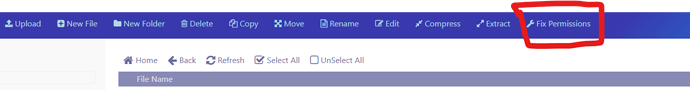

But, sometimes it is necessary to act as root (e.g. move a Duplicator backup file from an existing website to a newly created one). So, while I’m sure there’s a simple SSH command for this, whenever I move files around, I always use the “Fix Permissions” function in the File Manager afterwards.

Give that a try and then test out the backups to see if the problem persists. If not, we’ve found the problem and there should probably be some fix whereby Fix Permissions is run before a backup is initiated.

IMPORTANT ADDITIONAL INFO

I just made another test. The issue may not be the user and group but the group and others permissions. I noticed that my backup file (4.7 GB) had 600 permissions and the small file had 644, so I tested changing the small file to 600 with user and group root, and the public_html folder was deleted.

In summary: when there is a file that the website user/group can't read, the backup deletes the public_html folder.
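Given that finding, a pre-backup check could list the files the website user cannot read, so they can be fixed before a backup runs. The sketch below applies the standard Unix rule (owner bits if the file's owner matches, else group bits if the group matches, else "others" bits) to each regular file. It is an assumption-laden sketch: it ignores ACLs, supplementary groups, and directory permissions, and `can_read` / `unreadable_files` are hypothetical names, not part of CyberPanel.

```python
import os
import stat


def can_read(st: os.stat_result, uid: int, gid: int) -> bool:
    """Mode-bit check of whether uid/gid may read a file.

    Simplification: ACLs and supplementary groups are not considered.
    """
    if st.st_uid == uid:
        return bool(st.st_mode & stat.S_IRUSR)
    if st.st_gid == gid:
        return bool(st.st_mode & stat.S_IRGRP)
    return bool(st.st_mode & stat.S_IROTH)


def unreadable_files(site_dir: str, uid: int, gid: int) -> list[str]:
    """List regular files under site_dir that uid/gid cannot read."""
    bad = []
    for root, _dirs, files in os.walk(site_dir):
        for name in files:
            path = os.path.join(root, name)
            st = os.lstat(path)  # lstat: do not follow symlinks
            if stat.S_ISREG(st.st_mode) and not can_read(st, uid, gid):
                bad.append(path)
    return sorted(bad)
```

With the scenario above, a 600 file owned by root:root would be reported, while a 644 file would not. The uid/gid can be looked up with `pwd.getpwnam("<site user>")` (`pw_uid`, `pw_gid`); the site user name here is a placeholder.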

@ricardojds Can you please try running Fix Permissions as I detailed in my comment above?

Fix Permissions sets all files to 644 and assigns the website user and group; after that, the backup runs OK.

A workaround could be running Fix Permissions before the backup, but this has security drawbacks, because for configuration files we may want to set the permissions to 400.
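Since test 2 above showed that changing ownership to the website user was enough, one possible middle ground is to fix only ownership and leave permission bits alone: a 400 config file then stays 400 but becomes readable by its new owner. The sketch below is hypothetical (`reown_site_files` is not a CyberPanel function); by default it only reports entries below the site folder not owned by the given uid:gid, and with `apply=True` it runs `os.chown` on them, which requires root. Whether CyberPanel's backup is satisfied by this alone has not been verified in this thread.

```python
import os


def reown_site_files(site_dir: str, uid: int, gid: int, apply: bool = False) -> list[str]:
    """Find (and optionally chown) entries below site_dir not owned by uid:gid.

    Permission bits are never modified. Changing ownership requires root.
    The top-level site_dir itself is not included.
    """
    changed = []
    for root, dirs, files in os.walk(site_dir):
        for name in dirs + files:
            path = os.path.join(root, name)
            st = os.lstat(path)
            if (st.st_uid, st.st_gid) != (uid, gid):
                changed.append(path)
                if apply:
                    # follow_symlinks=False changes the link itself, not its target
                    os.chown(path, uid, gid, follow_symlinks=False)
    return sorted(changed)
```

Running it first without `apply` gives a dry-run list to review before anything is changed.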