ldavid

July 31, 2022, 3:32pm


Hello! CyberPanel is fantastic; I'm on year 3 now. Thank you!

I have a new Ubuntu server with custom partitioning:

root@gpl:/# df -ih

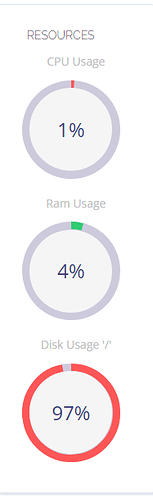

Question: which folder is CyberPanel reporting as 92% full?

Not sure why you posted `df -ih` (the inode count). What's the purpose? `df -h` gives you disk space. Also not sure why you have 256GB of swap; if I filled that much swap on a web server I'd be super concerned.

In any case, you can debug the issue by running the same call CyberPanel uses for that figure (which isn't `df` or `du`):

```
python3
>>> import psutil
>>> psutil.disk_usage('/')
>>> exit()
```

Just looking at the inode count of / (640k) compared with /var (6.3M inodes), I'm not sure about your partitioning reasoning. psutil isn't super precise and doesn't always recognize unusual mounts, but it's usually not THAT far off.
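To see which mount is actually filling up, a minimal sketch (assuming Linux, where `/proc/mounts` lists mounted filesystems) can compute a used percentage for every real mount point with only the standard library. It uses `os.statvfs` and the `used / (used + space available to users)` ratio, which is roughly what `df` shows in its Use% column; the list of pseudo-filesystems to skip is an assumption and not exhaustive.

```python
import os

# Pseudo/virtual filesystem types to skip (assumption: common ones only).
PSEUDO_FS = {
    "proc", "sysfs", "devtmpfs", "tmpfs", "devpts", "cgroup", "cgroup2",
    "securityfs", "pstore", "bpf", "debugfs", "tracefs", "configfs",
    "fusectl", "mqueue", "hugetlbfs", "autofs", "squashfs", "overlay",
    "binfmt_misc", "efivarfs", "nsfs", "ramfs", "rpc_pipefs",
}


def percent_used(blocks, bfree, bavail, frsize):
    """Used share of the space visible to normal users, rounded to 0.1%."""
    used = (blocks - bfree) * frsize
    avail = bavail * frsize  # excludes blocks reserved for root
    total_user = used + avail
    return 0.0 if total_user == 0 else round(used / total_user * 100, 1)


def real_mount_points(mounts_file="/proc/mounts"):
    """Yield mount points whose filesystem type is not in PSEUDO_FS."""
    with open(mounts_file) as fh:
        for line in fh:
            fields = line.split()
            if len(fields) < 3:
                continue
            mount_point, fs_type = fields[1], fields[2]
            if fs_type not in PSEUDO_FS:
                # /proc/mounts escapes spaces in paths as \040
                yield mount_point.replace("\\040", " ")


def report():
    """Print size and used percentage for each real mount point."""
    for mp in real_mount_points():
        try:
            st = os.statvfs(mp)
        except OSError:
            continue  # unreadable or vanished mount; skip it
        pct = percent_used(st.f_blocks, st.f_bfree, st.f_bavail, st.f_frsize)
        size_gib = st.f_blocks * st.f_frsize / 2**30
        print(f"{mp:<20} {size_gib:8.1f} GiB {pct:5.1f}% used")


if __name__ == "__main__":
    report()
```

Whichever mount point shows the high percentage is the partition to look at; the figure for `/` should be close to what `psutil.disk_usage('/')` reports.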

ldavid

August 13, 2022, 3:24pm


Hello, thank you for the fast reply. Yes, `df -h` is what I should have posted:

The swap: I sized it using the rule of double the RAM.

The server I am using is the AMD 5950X: https://www.hetzner.com/dedicated-rootserver/ax101/configurator#/

Can I resize anything to stop the usage (now 97%) from going higher?

Or should I reinstall Ubuntu and use the default values for everything?

Well, that's the Ubuntu desktop partitioning. The "double the RAM" swap rule is for laptops that hibernate (see the SwapFaq page on the Ubuntu Community Help Wiki); the swap for a 128 GB server that doesn't hibernate is 11 GB. Does your server hibernate? With a lid you close and everything? I'm curious, actually.
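The SwapFaq guidance boils down to a small formula. The sketch below is one reading of its rule of thumb (swap of at least RAM below 1 GB, about the square root of RAM otherwise, and RAM plus that square root when hibernating); it approximates the wiki's table and is not an official tool.

```python
import math


def recommended_swap_gb(ram_gb: float, hibernate: bool = False) -> float:
    """Approximate SwapFaq rule of thumb, in GB.

    - RAM below 1 GB: swap at least equal to RAM.
    - Otherwise: about sqrt(RAM), rounded to a whole GB.
    - With hibernation, RAM is added because its contents must fit in swap.
    """
    if ram_gb < 1:
        base = ram_gb
    else:
        base = round(math.sqrt(ram_gb))
    return ram_gb + base if hibernate else base


for ram in (16, 64, 128):
    print(ram, recommended_swap_gb(ram), recommended_swap_gb(ram, hibernate=True))
```

For the 128 GB server in this thread that gives 11 GB without hibernation (139 GB with it), compared with 256 GB under the double-the-RAM rule.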

Anyway, the whole 63 GB of /cgroup, /dev and all that /snap nonsense is for Ubuntu's desktop snap app store system (https://snapcraft.io/), which sucks and needs to go from any server, along with all the desktop stuff: policykit, multipath, udisks2, etc. (there's a ton; Ubuntu desktop is very bloated).
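To tell which `df` rows are snap images or memory-backed filesystems rather than real disk partitions, a small sketch can group the lines of `/proc/mounts` (Linux-specific) by kind. Snap packages appear as read-only squashfs mounts under /snap, and tmpfs/devtmpfs are memory-backed, so neither kind is the partition that is filling up. The set of memory-backed types is an assumption covering only common ones.

```python
from collections import defaultdict

# Memory-backed filesystem types (assumption: common ones only).
MEMORY_FS = {"tmpfs", "devtmpfs", "ramfs"}


def classify_mounts(mounts_text: str) -> dict:
    """Group /proc/mounts lines into 'snap', 'memory', 'block' and 'other'."""
    groups = defaultdict(list)
    for line in mounts_text.splitlines():
        fields = line.split()
        if len(fields) < 3:
            continue
        device, mount_point, fs_type = fields[0], fields[1], fields[2]
        if fs_type == "squashfs" and mount_point.startswith("/snap/"):
            groups["snap"].append(mount_point)  # snap package image (loop device)
        elif fs_type in MEMORY_FS:
            groups["memory"].append(mount_point)  # lives in RAM, not on disk
        elif device.startswith("/dev/"):
            groups["block"].append(mount_point)  # real disk partition
        else:
            groups["other"].append(mount_point)  # proc, sysfs, cgroup, ...
    return dict(groups)


if __name__ == "__main__":
    with open("/proc/mounts") as fh:
        for kind, points in sorted(classify_mounts(fh.read()).items()):
            print(f"{kind:<7} {len(points):3d}  {', '.join(points[:5])}")
```

Only the mount points in the `block` group correspond to the partitions defined in /etc/fstab.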

There's basically no easy way to run a server with only 2% of the disk on / unless you start mounting every log folder somewhere with space; just 1.5 GB on /tmp is unworkable for almost any server. Be very wary of Ubuntu desktop images on a server: because of the whole snap thing, they're very different from a default Debian desktop.

You can fix almost all of this in /etc/fstab.
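As one illustration of "mounting folders to where there's space", an fstab bind mount maps a directory on a roomy partition onto a path on a small one. The source directory `/home/bind/tmp` below is hypothetical: it must exist before mounting, and because it replaces /tmp it needs the sticky world-writable mode (`chmod 1777 /home/bind/tmp`). Anything already in /tmp is hidden while the bind is active; `mount -a` mounts fstab entries that are not yet mounted.

```
# <source dir with space>  <target>  <type>  <options>  <dump>  <pass>
/home/bind/tmp             /tmp      none    bind       0       0
```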

ldavid

August 15, 2022, 2:45pm


Ahh, that is my mistake.

root@gpl:/# df

ldavid

August 15, 2022, 2:48pm


Here is fstab:

```
/dev/disk/by-uuid/b0745115-d688-456f-849b-cc0164865fb3 / ext4 defaults 0 1
/dev/disk/by-uuid/062288f2-f689-4dd0-8216-af27948be57d /home ext4 defaults 0 1
/dev/disk/by-uuid/0f685e88-a3d5-4f83-b01e-863c0a155875 /var ext4 defaults 0 1
/dev/disk/by-uuid/29276026-aac0-4911-8b08-2882b1c8073f /boot ext4 defaults 0 1
/dev/disk/by-uuid/6388-CCBB /boot/efi vfat defaults 0 1
/dev/disk/by-uuid/977ec893-aca5-4d18-9e42-fa6082438cf5 /backup_disk ext4 defaults 0 1
```
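Each fstab line has up to six whitespace-separated fields: device, mount point, filesystem type, mount options, dump flag and fsck pass number (the last two default to 0 when omitted). A minimal parser sketch for lines like the ones above, which makes it easy to list which device backs each mount point:

```python
import os
from typing import List, NamedTuple


class FstabEntry(NamedTuple):
    device: str
    mount_point: str
    fs_type: str
    options: str
    dump: int
    pass_no: int


def parse_fstab(text: str) -> List[FstabEntry]:
    """Parse fstab-format text, skipping blank lines, comments and short lines."""
    entries = []
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        fields = line.split()
        if len(fields) < 4:
            continue  # not a usable entry
        dump = int(fields[4]) if len(fields) > 4 else 0
        pass_no = int(fields[5]) if len(fields) > 5 else 0
        entries.append(FstabEntry(fields[0], fields[1], fields[2], fields[3], dump, pass_no))
    return entries


if __name__ == "__main__" and os.path.exists("/etc/fstab"):
    with open("/etc/fstab") as fh:
        for e in parse_fstab(fh.read()):
            print(f"{e.mount_point:<15} {e.fs_type:<6} {e.device}")
```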

Ah, I can't help you; my posts get downvoted and eventually removed from this community by dreamer and master. I'm jumping ship.

Good luck. Maybe if you post the `df` output one more time like shoaibkk suggested, it'll fix everything!

ldavid

August 19, 2022, 1:27pm


The server seems to run fine, but the drive says it is nearly full. Here is `df`: